Browse AI Agents

Find the perfect AI agent for your specific needs

Trae (/treɪ/) is your helpful coding partner. It offers features like AI Q&A, code auto-completion, and agent-based AI programming capabilities. When developing projects with Trae, you can collaborate with AI to enhance your development efficiency.

Comprehensive IDE functionalities: Trae provides essential IDE functionalities, including code writing, project management, extension management, version control, and more.

## Use Cases

* **AI Q&A**: While coding, you can chat with the AI assistant at any time to get help, including asking it to explain code, write code comments, fix errors, and more. For more information, refer to "Side chat" and "Inline chat".
* **Real-time code suggestions**: The AI assistant understands the current code and suggests code in real time within the editor. For more information, refer to "Auto-completion".
* **Code snippet generation**: Describe your needs to the AI assistant in natural language, and it will generate the corresponding code snippets or autonomously write project-level and cross-file code.
* **0 to 1 project development**: Tell the AI assistant what kind of program you want to develop, and it will provide the corresponding code or automatically create related files based on your description. For more information, see "Builder".

## Feature

* **Builder**: The Builder mode can help you develop a complete project from scratch, and you can integrate it into your project development process. In Builder mode, the AI assistant uses various tools based on your needs when responding. These include tools for analyzing code files, editing code files, running commands, and more, which makes the responses more precise and effective.
* **Multiple ways to add context**: When chatting with the AI assistant, you can specify content within Trae (such as code, files, folders, and the workspace) as context for the AI assistant to read and understand. This ensures that the AI assistant's responses align more closely with your needs. There are three ways to specify context:
  * Method 1: Reference the content in the editor as context
  * Method 2: Reference the content in the terminal as context
  * Method 3: Add context using the # symbol (code, file, folder, workspace)
* **Multimodal input**: You can add images during the chat, such as error screenshots, design drafts, reference styles, and more, to express your needs more accurately and efficiently.
* **Source control**: In Trae, you can use source control to manage changes to source code over time.
* **Remote development using SSH**: The SSH-based Remote Development functionality lets you use your local PC to access and manage files on remote hosts directly. With this functionality, you can use all of Trae's features, including code completion, navigation, debugging, and the AI assistant, to manage remote files without storing the source code from remote hosts on your local PC.

Open-source autonomous AI coding assistant that embeds in VS Code. Acts as a fully collaborative AI partner that can plan tasks and then take actions (write code, run commands) to assist developers.

## Use Case

In-IDE automation of software development. Cline is used to handle entire coding tasks within VS Code – from generating new modules and fixing bugs to running tests or database queries – with minimal human intervention, functioning as a “co-developer” that can work on the project alongside you.

## Feature

• Plan-and-Act Agent: Unlike simple code assistants, Cline first devises a step-by-step plan for complex tasks and seeks user approval, then executes the steps automatically (“Plan” and “Act” modes).
• Collaborative Explanations: It explains its reasoning and approach in real time, asking for guidance when needed, so you’re always in the loop.
• Full VS Code Integration: Reads and writes your project files, and can handle multiple files or repositories at once, e.g. it can update an entire codebase or create new files as required.
• Terminal & Tools Access: Can execute terminal commands, run tests, and interact with external systems from within VS Code. Via MCP (Model Context Protocol), it links to external resources (databases, documentation servers, etc.) to extend its capabilities.
• Multi-Model Support: Plug-and-play with top AI models, including Anthropic Claude, Google Gemini, and others, giving flexibility in choosing LLMs.
• Safe Control: Includes a checkpoint system to review diffs of AI-made changes and roll back if needed, ensuring you maintain control over your codebase.
• Fully Extensible & OSS: 100% open source, allowing customization. An active extension ecosystem lets teams extend Cline’s abilities.
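The Plan-and-Act flow described above can be pictured with a small sketch. This is not Cline's implementation; the `Step` type, the `plan_and_act` function, and the `approve` callback are hypothetical names used only to illustrate "show the plan, wait for approval, then execute".

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    """One planned action: a human-readable description plus the work to run."""
    description: str
    action: Callable[[], str]


def plan_and_act(steps: List[Step], approve: Callable[[List[str]], bool]) -> List[str]:
    """Present the plan first; run the steps only if the user approves it."""
    plan = [step.description for step in steps]
    if not approve(plan):
        # Plan rejected: nothing is executed.
        return []
    results = []
    for step in steps:
        results.append(step.action())
    return results


if __name__ == "__main__":
    demo_steps = [
        Step("Create module", lambda: "module created"),
        Step("Run tests", lambda: "tests passed"),
    ]
    # Hypothetical approval callback that always accepts the plan.
    print(plan_and_act(demo_steps, approve=lambda plan: True))
```

Keeping approval as a separate callback means no step runs before the user has seen the whole plan, which is the property the Plan and Act modes are described as providing.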

AI coding agent built for professional engineers and large codebases. Aims to be an “AI software engineer” that knows your entire codebase and workflow, enabling it to take on sizable development tasks autonomously.

## Use Case

Enterprise and team software development at scale. Augment is used to handle complex projects with many repositories, assisting with tasks from planning and coding to integrating with issue trackers, effectively augmenting large dev teams’ productivity. It’s particularly suited for large companies looking to accelerate development while maintaining code quality across big codebases.

## Feature

• Massive Context Handling: Designed for very large codebases. It can work with contexts up to 200K tokens, allowing it to reason about multiple repositories or millions of lines of code without manual context setup (a key differentiator).
• “Memories” Personalization: Learns from interactions to adapt to your project’s conventions and your coding style over time, so suggestions align with your preferences (it builds a memory of past decisions).
• Real-Time Team Sync: Unlike tools that get out of sync, Augment syncs with your Git repository in real time. If a teammate commits code, the AI is aware of it right away, so its suggestions reflect the latest code state.
• End-to-End Integration: Integrates with tools like GitHub, Linear, Jira, Notion, Slack, etc. to go from ticket to pull request. For example, it can take a task from a Jira ticket, write the code, open a PR, and notify you on Slack.
• Multi-IDE Support: Works with developers’ preferred environments – VS Code (general availability) and JetBrains IDEs (preview) – without requiring a fork. Augment preserves 100% compatibility with VS Code extensions.
• Enterprise-Grade Security: SOC 2 Type II compliant, with features like isolated on-prem deployment for companies. No training on your code (strict privacy guarantees) and extensive testing to ensure it makes safe changes.
• Advanced Coding Agent: Goes beyond autocomplete. It can autonomously plan and implement multi-step changes (e.g. migrating an SDK across a codebase, adding a complex feature), handling the heavy lifting while you supervise results.

Proprietary AI-powered code editor (a fork of VS Code) by Anysphere, designed to boost developer productivity by deeply integrating AI into the coding workflow. Cursor provides a familiar IDE experience with added capabilities for code generation, editing, and understanding.

## Use Case

A full replacement for VS Code aimed at individual developers and teams who want AI assistance built into their IDE. Use cases include writing new code using natural language, refactoring large codebases faster, getting instant answers or documentation for code, and generally speeding up the coding of applications or scripts.

## Feature

### Natural-Language Code Edits

Allows developers to “write code using instructions.” You can describe a function or change in English, and Cursor generates or updates the code (classes, functions, etc.) accordingly.

### Intelligent Autocomplete

AI-powered autocompletion that predicts not just the next token but the next logical snippet or block, often completing whole lines or blocks of code.

### Codebase Queries

Understands the entire project. You can ask questions about your codebase (e.g. “Where is function X defined?”) or search code semantically. This helps with navigating and comprehending large projects.

### Smart Refactor & Rewrite

Can perform multi-file or project-wide rewrites. For example, instructing “rename this API endpoint across the codebase” or “upgrade this library usage” will prompt Cursor to apply changes in all relevant places.

### Extension Compatibility

Being a VS Code fork, it supports most VS Code extensions, themes, and settings, so developers don’t lose their ecosystem. This means you can use Git integrations, linters, debuggers, etc., alongside Cursor’s AI features.

### Privacy & Security

Offers a Privacy Mode where code is not stored remotely. The platform is SOC 2 certified, addressing enterprise security concerns about using an AI-powered editor.

A next-generation AI productivity tool with a two-dimensional canvas interface. Flowith enables multi-threaded, non-linear interaction with multiple AI agents and models in one workspace, aiming to help users achieve a “flow state” for deep work.

## Use Case

Complex, multi-step problem solving and knowledge work. Flowith is used for research, brainstorming, learning, or any task where you might want to pursue multiple lines of thought. For example, one agent can gather and organize information (fetching and summarizing content) while another writes code or analyzes data, all concurrently on a canvas. It’s like an AI-powered sandbox for projects that involve text, code, and notes together.

## Feature

* Canvas UI: Instead of a single chat, you have an infinite canvas where you can spawn multiple chat nodes. This visual layout lets you run parallel conversations or workflows (e.g. one agent writing an essay outline while another debugs code), and you can see and connect different threads.
* Oracle Mode (Agent): A powerful autonomous agent, “Flowith Oracle,” can plan and execute multi-step tasks automatically. It does task decomposition, uses tools, self-optimizes, and presents a reasoning chain, much like AutoGPT but more stable. You can give it a complex goal and watch it break it down and solve sub-tasks one by one.
* Knowledge Garden: An integrated knowledge base that users can build. It ingests your files, notes, and URLs, breaks them into “Seeds” of info, and connects them. The AI uses this to give context-aware answers using your data. This is essentially a personal Second Brain for contextual retrieval during chats.
* Multi-Model Support: You can use different AI models in different nodes (for instance, GPT-4 in one conversation and another model in a different thread). Flowith can select the best model for a task or let you run models concurrently (via tool selection, as hinted in product materials).
* Tool Integrations: Supports using external tools (web search, calculators, etc.) within conversations. The Oracle agent has unlimited tool invocation capability, so it can, for example, call APIs or run Python code if set up.
* Non-linear Workflow: Because of its multi-threaded design, you can organize thoughts, to-dos, and outputs spatially. This makes it easier to handle elaborate projects (e.g. writing a research paper with sections in different nodes, or managing a coding project with separate agents for different functions).

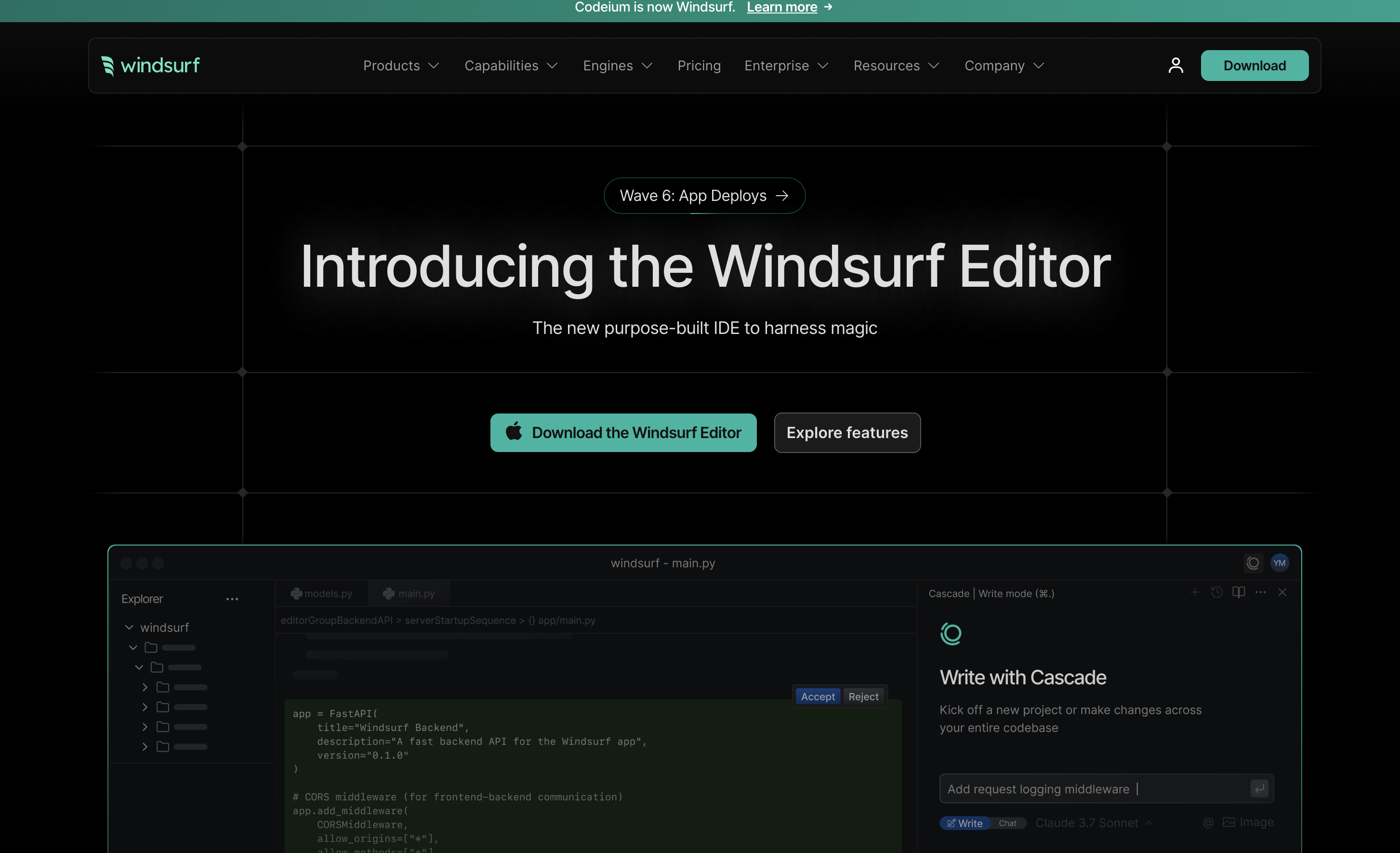

An AI-first IDE by the makers of Codeium. Windsurf combines a Copilot-like AI helper with agentic capabilities in a standalone code editor. It’s billed as the first AI agent-powered IDE that keeps developers “in flow,” meaning minimal context-switching because the AI is deeply integrated with your coding process.

## Use Case

General coding and software development with tight AI integration. Use cases range from writing and editing code with AI assistance to having the AI run commands, fix errors, and even deploy applications, all from within the IDE. It’s meant for developers who want an out-of-the-box editor that can handle not just autocompletion but also complex tasks (like debugging or multi-file refactoring) through an agent that works alongside them.

## Feature

* AI “Flows”: Windsurf introduces Flows, which blend Copilot-style collaboration with Agent-style autonomy. This means the AI can both assist as you type (as a coding partner) and take initiative to handle larger tasks when asked. The state is shared: the AI is always aware of your current code and context, creating a “mind-meld” experience.
* Cascade Mode: The core agent mode in Windsurf is called Cascade. It has full project awareness (able to handle production-scale codebases with relevant suggestions) and a breadth of tools. Cascade can suggest and execute shell commands, detect issues (like crashes or failing tests) and debug them, and perform iterative problem-solving across files. It’s designed to pick up where you left off, reasoning about your actions to continue the work.
* Multi-File & Contextual Edits: Windsurf handles coherent edits spanning multiple files thanks to advanced indexing and context. For example, you can say “update all API calls to use v2 of the endpoint” and it will modify all relevant files appropriately. Its LLM-based code search (better than simple text/regex search) helps find all the spots to change.
* Integrated Terminal & Tools: The editor suggests terminal commands and can run them for you (e.g., running tests, installing packages). It also hooks into linters and debuggers: if generated code doesn’t pass lint, it auto-fixes it. You can even get a live preview of a web application inside the IDE and instruct the AI to tweak the UI by clicking on elements.
* Developer UX Features: Includes Tab to Jump (predicts and moves your cursor to the next logical edit point), Supercomplete (enhanced autocomplete that guesses your next action, not just next tokens), in-line command prompts (pressing a hotkey to ask the AI to generate or refactor code in place), and code lenses for one-click refactoring or explanations. These quality-of-life features make the coding experience smoother than vanilla VS Code.
* MCP Extensibility: Supports the Model Context Protocol (MCP) to connect external tools and services, similar to Cline and others, meaning you can extend Windsurf’s agent with custom tools or data sources.

Open-source all-in-one KnowledgeOS (knowledge management system) that blends documents, whiteboards (infinite canvas), and databases, with a built-in AI assistant. AFFiNE’s motto is “Write, Draw, Plan, All at Once, with AI”: it lets you create content and organize knowledge freely, while an “AFFiNE AI” copilot helps generate and structure content.

## Use Case

Note-taking, knowledge management, and project planning with AI augmentation. Teams and individuals use AFFiNE as a replacement for tools like Notion, Miro, and Trello combined. You can take meeting notes or requirements documents and have the AI summarize or refine them, brainstorm on a canvas with AI generating ideas or images, and manage tasks or data tables with AI assistance. It’s useful whenever you need to organize thoughts or present information and want AI to help with generation or formatting.

## Feature

* Unified Workspace: Fully merged document editor + infinite whiteboard + spreadsheet/database in one app. You can write rich text, sketch diagrams, and track structured data without switching tools, and all data types can be interlinked.
* AI Writing Assistant: AFFiNE AI can generate and improve text content. For instance, it can expand a few bullet points into a detailed article or blog post, or rewrite text in a different tone and fix grammar. This helps users create polished docs faster.
* AI Visualization & Planning: The AI helps turn outlines into visual presentations automatically (it can generate slide decks from an outline, currently in beta). It can also summarize a document into a mind map or diagram, giving a structured visual summary. These features tie the AI to the whiteboard aspect (Canvas AI).
* Task Management & DB with AI: AFFiNE has table/database views for things like Kanban boards or task lists. The AI can assist by auto-sorting, tagging, or prioritizing items (features like auto-tagging are coming soon). It can also answer questions about your tables and data or generate analytics summaries.
* Real-time Collaboration: Multiple users can collaborate on the same AFFiNE workspace. Changes sync in real time (like Google Docs/Sheets). The AI can operate collaboratively too, e.g., a team brainstorming session on the canvas can involve the AI suggesting ideas live. All data is local-first (for privacy), with cloud sync as an option.
* Templates & Extensibility: Comes with ready-to-use templates (planners, storyboards, note formats, etc.) to jumpstart projects. Being open-source, it’s extensible: the community can add plugins or custom integrations (and AFFiNE builds in public with community feedback).

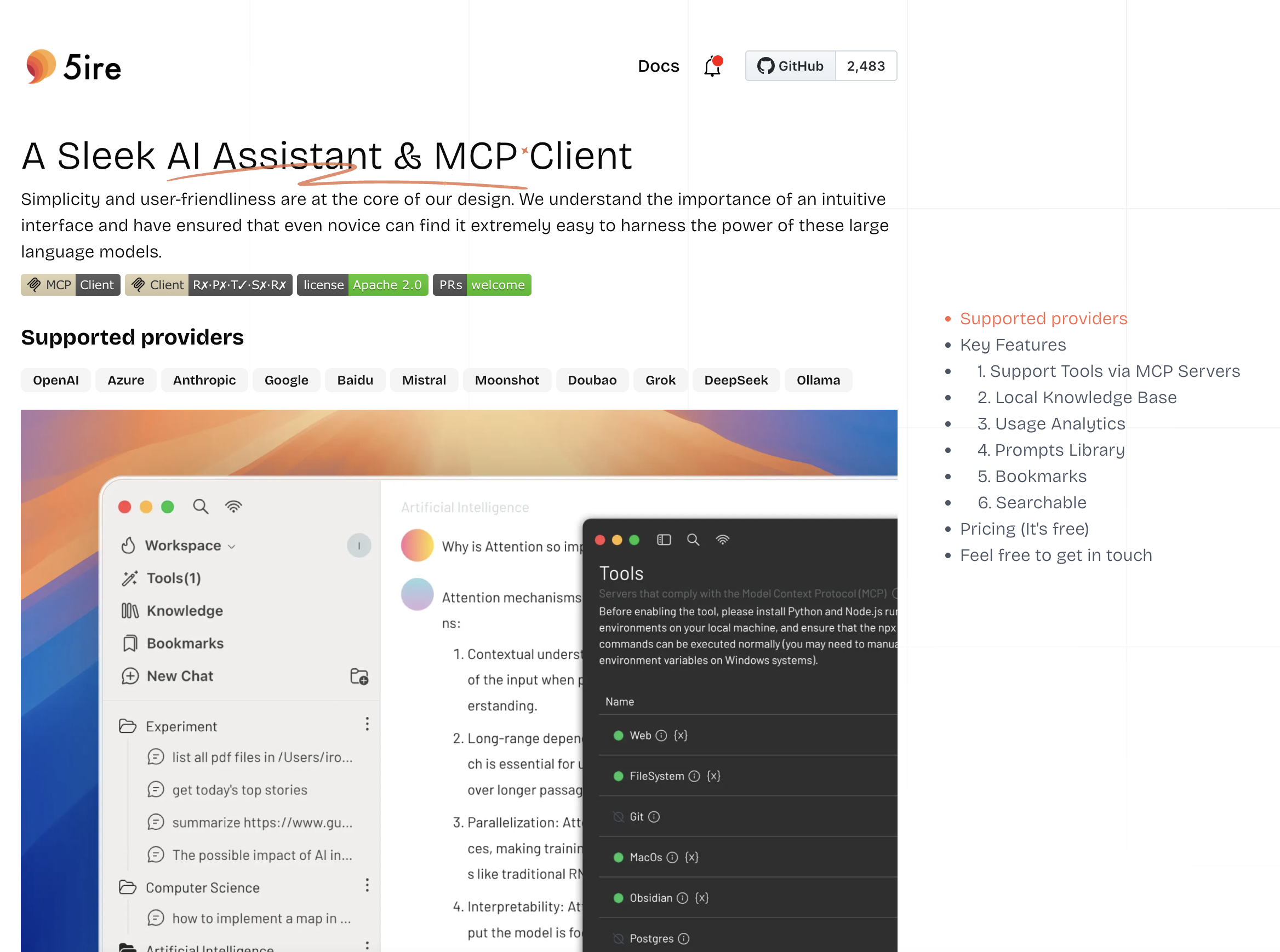

Open-source, cross-platform desktop AI assistant and MCP client. 5ire provides a user-friendly chat interface to a variety of AI models (local and cloud) and allows tool use via the Model Context Protocol, all running on your own machine. It’s like having a customizable ChatGPT that can plug into your files and apps.

## Use Case

Acts as a personal AI agent for both coding and general purposes. For example, a developer can use 5ire to load their project and ask the AI to read files, generate code, or debug errors (since 5ire can use tools to access the filesystem). Non-developers might use it to analyze documents or automate workflows (through plugins). It’s essentially an extensible AI assistant you control locally, suitable for anyone who wants advanced AI capabilities (coding help, data analysis, etc.) without relying on a cloud service’s interface.

## Feature

* Multi-Model Hub: Connects to many AI providers out of the box: OpenAI (GPT-4, GPT-3.5), Anthropic (Claude), Google PaLM, Baidu, local models via Ollama, etc. You can choose or switch models for different tasks and even run open-source models on your machine.
* Tool Use via MCP: Supports Model Context Protocol, a standard for tool plugins. 5ire can use tools such as file system access (read/write local files), system info, and database or API queries through MCP servers. There’s an open marketplace of community-made MCP plugins, so your AI can be extended to do web browsing, execute code, and more, similar to ChatGPT Plugins but completely under your control.
* Local Knowledge Base: Built-in support for ingesting documents (PDF, DOCX, CSV, etc.) and creating embeddings locally using a multilingual model. This lets you do Retrieval-Augmented Generation on your own data without sending it to the cloud: you can ask questions about your files and 5ire will answer using that content.
* Conversation Management: You can bookmark important conversations and search across all past chats by keyword, making it easy to retrieve past insights. Even if you clear the chat, your saved knowledge can persist via bookmarks.
* Usage Analytics: If using paid APIs, 5ire tracks your usage and spend for each provider, so you can see how many tokens and calls you’re using. This helps optimize costs when using multiple models.
* Extensible & Cross-Platform: Works on Windows, Mac (brew cask available), and Linux. It’s open-source (TypeScript), allowing developers to contribute or fork. You can even build custom apps on top of 5ire or integrate it with other systems (the team provides a development guide).
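To make the Local Knowledge Base idea concrete, here is a minimal retrieval sketch in Python. It is an illustration only, not 5ire's code: a real setup would use a learned multilingual embedding model, whereas this sketch substitutes simple word counts as a stand-in, and `embed`, `cosine`, `retrieve`, and `build_prompt` are hypothetical helper names.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Stand-in 'embedding': lowercase word counts (an assumption, not a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 1) -> str:
    """Put the retrieved passages in front of the question before it goes to a model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "To reset your password, open Settings and choose Security.",
        "Invoices are emailed on the first day of each month.",
        "The API rate limit is 60 requests per minute.",
    ]
    print(build_prompt("How do I reset my password?", docs))
```

Because both indexing and ranking happen in-process, no document text leaves the machine until the final prompt is sent to whichever model the user has chosen.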

CherryStudio is an all-in-one AI assistant platform (desktop application) that consolidates multiple AI services into one experience. It supports multi-model dialogue (various LLMs), has a built-in knowledge base, offers AI art generation, translation tools, and more, all with a customizable, user-friendly interface. Think of CherryStudio as a Swiss army knife for AI tasks: whether you want to chat with GPT-4, generate an image, ask a specialized domain expert, or translate a document, it can do it within one unified environment.

## Use Case

Aimed at both professional and personal use, CherryStudio shines in scenarios where users need a versatile AI toolkit. For example, researchers and students use it to manage and query large knowledge bases (ask questions against PDFs or notes), writers use its multiple models to get diverse creative ideas, developers use code assistants, and business users might use industry-specific chatbots for analysis. Its applicable scenarios include knowledge management and Q&A, multi-model content creation, translation and office productivity, and creative design through AI art. In practice, a user could have CherryStudio open as a daily assistant: one moment using a finance bot to summarize market data, next generating an image for a presentation, then translating that report into another language, all without switching apps.

## Features

* Multi-Model Dialogue: CherryStudio allows querying multiple AI models simultaneously. You can pose a question and get answers from, say, GPT-4, Anthropic Claude, and other models side by side. This one-question-multiple-answers feature helps compare model outputs and find the best result. It also supports chatting with models from different providers in separate tabs or even concurrently in one session.
* Assistant Marketplace: It includes a marketplace of pre-built AI assistants (over a thousand as of 2024) covering domains like translation, programming, writing, education, etc. These are persona or task-specific bots (for example, a “Travel Planner” assistant or a “Java Debugger” assistant) that come with preset prompts and configurations. Users can also create and share their own custom assistants.
* Integrated AI Utilities: CherryStudio goes beyond chat. It has built-in AI art generation with a dedicated drawing panel for text-to-image creation. It also provides translation tools, including on-the-fly translation in conversations and a special translation panel for documents. There’s support for summarization and explanation of long text as quick actions (select text and summarize, etc.). In short, it combines many AI use cases (image generation, translation, Q&A) in one app.
* File and Knowledge Management: Users can upload files or whole folders into CherryStudio and build a local knowledge base. The system indexes documents (PDFs, Word, etc.) and allows AI queries over them. It also keeps a unified history of all chats and generated content. Features like global search let you quickly find anything said or any info stored across all your assistants and files. This is useful for research and for retrieving past answers or data.
* Support for Multiple AI Providers: CherryStudio can connect to many AI model APIs (OpenAI, Azure, Anthropic, Baidu Wenxin, etc.) as well as run local models on your hardware. It provides a unified interface to all these providers, with features like rotating API keys to avoid rate limits and automatic model list retrieval. Essentially, it’s model-agnostic: you can plug in your API keys from various services and use them all in one place.
* Highly Customizable UI: The platform is built with user customization in mind. It supports custom themes and CSS, different chat layout styles (message bubbles vs. chat list), Markdown rendering (with math formulas, code highlighting, etc.), and exporting chats to Markdown/PDF for sharing. Users can tailor the interface to their preference, even creating or installing themes (the community has made themes like “Aero” and “PaperMaterial”).
* Cross-Platform & Extensible: CherryStudio runs on Windows, macOS, and Linux with a simple installation (no complex setup). It’s also open-source and extensible: advanced users can develop plugins or contribute to its code. Planned features (according to the roadmap) include JavaScript plugin support, a browser extension for quick AI actions on webpages, and mobile apps in the future. This roadmap indicates a commitment to broadening its ecosystem.

## Maturity

Active Open-Source Project. CherryStudio emerged in 2023 and has been under continuous development. While already quite feature-rich, it is still approaching its first full version release (an official v1.0 is noted as a TODO). The project is very active: updates are frequent, the documentation is updated regularly, and the developers are responsive to issues. Because it’s open-source, power users often contribute improvements. It’s generally stable for daily use, though as an evolving desktop app, occasional minor bugs can occur. Overall, it’s at a late-beta/near-mature stage: used by thousands of users, but still rapidly adding new features and polish.

## Popularity

CherryStudio has a strong following, especially among AI enthusiasts and developers in Asia. It has garnered over 22,000 stars on GitHub, which places it among the top community AI tools. It also maintains active user communities on Telegram, Discord, QQ, and other platforms for support and feedback. Many users gravitate to CherryStudio because it consolidates what would otherwise require several different AI apps or browser tools; this convenience and its open nature have driven adoption. It’s frequently recommended in AI forums as a go-to app for those who want more control over their AI experience (like using custom models or keeping data local). While not as publicized in mainstream media, within its niche CherryStudio enjoys a reputable status and a growing global user base through word of mouth.

## Cost

Free and Open-Source. CherryStudio is released under an open-source license (MIT/Apache) and is free to download and use. There is no paid tier for the software itself. Users might incur costs from the third-party AI APIs they connect (for example, you still pay OpenAI for API calls if you use your OpenAI key), but CherryStudio doesn’t charge anything on top. The project sustains itself through community support (users can sponsor the project on GitHub or similar). For companies or advanced users, the open source code means it can be self-hosted or customized internally at no cost. The free cost is a major attraction, especially compared to commercial AI agent platforms.
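The API key rotation mentioned under "Support for Multiple AI Providers" can be illustrated with a simple round-robin sketch. How CherryStudio actually rotates keys is not described here, so `KeyRotator` and `next_key` are hypothetical names and round-robin is an assumed strategy.

```python
from itertools import cycle
from typing import Iterable


class KeyRotator:
    """Hand out API keys in round-robin order so requests are spread across keys."""

    def __init__(self, keys: Iterable[str]) -> None:
        self._keys = list(keys)
        if not self._keys:
            raise ValueError("at least one API key is required")
        self._cycle = cycle(self._keys)

    def next_key(self) -> str:
        """Return the key to use for the next request."""
        return next(self._cycle)


if __name__ == "__main__":
    # Placeholder key names, not real credentials.
    rotator = KeyRotator(["key-a", "key-b"])
    for _ in range(3):
        print(rotator.next_key())
```

Spreading calls across keys only helps when each key has its own rate limit; whether that holds depends on the provider's terms.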

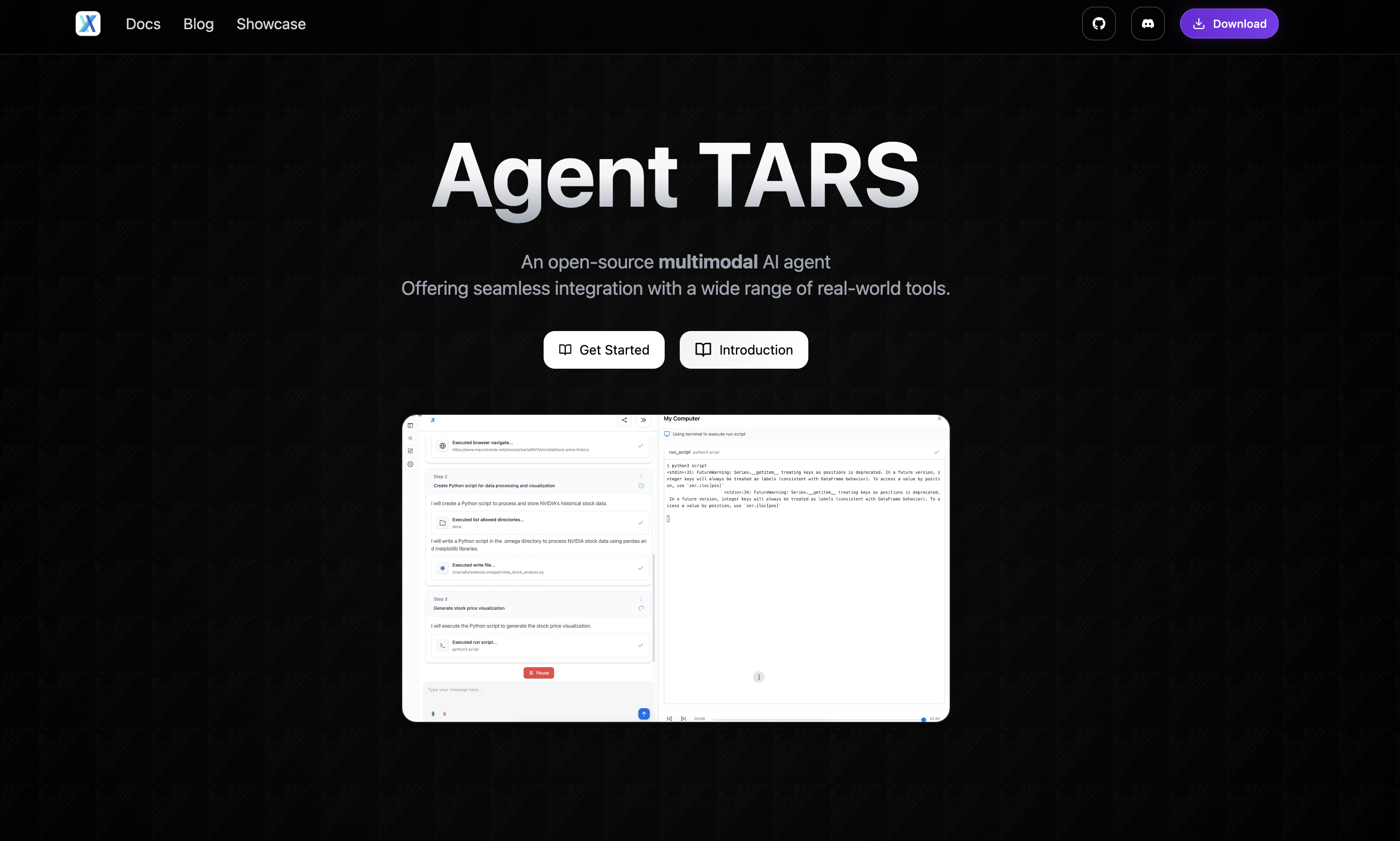

Agent TARS is an open-source multimodal agent for GUI interaction: it visually interprets web pages and integrates with command lines and file systems. It is designed for workflow automation, going beyond static chatbots by making its own decisions and evolving over time.

## Use Case

Primarily used for web-based task automation and research assistance, it can orchestrate complex tasks such as deep web research, interactive browsing, information synthesis, and other GUI-driven workflows without continuous human input. This makes it useful for gathering and analyzing information across the web or performing repetitive browser actions on the user’s behalf.

## Feature

* Advanced Browser Operations: Can perform sophisticated multi-step web browsing tasks (e.g. automated deep research, clicking through pages) using visual understanding of pages.
* Comprehensive Tool Support: Integrates with various tools (search engines, file editors, shell commands, etc.), enabling it to handle complex workflows that combine internet data with local operations.
* Enhanced Desktop App: Provides a rich UI for managing sessions, model settings, dialogue flow visualization, and tracking the agent’s browser/search status.
* Workflow Orchestration: Coordinates multiple sub-tools (search, browse, link exploration) to plan and execute tasks end to end, then synthesizes results into final outputs.
* Developer-Friendly Framework: Extensible for developers. It works within the UI-TARS framework, allowing customization and new workflow definitions for agent projects.

## Maturity

Technical Preview. Agent TARS is in an early development stage and not yet stable for production use. It was first announced in March 2025 and is evolving rapidly with community contributions.